Here you will get an introduction to deep learning.

Deep learning, also known as hierarchical learning, is part of a broader family of machine learning methods that learn representations of data rather than relying on task-specific algorithms. Its models are loosely inspired by information processing and communication patterns in biological nervous systems, such as neural coding, which attempts to define the relationship between stimuli and the associated neural responses in the brain.

Deep learning architectures such as deep neural networks and recurrent neural networks have been applied in many fields, including computer vision, speech recognition, image processing, bioinformatics, social network filtering and drug design.

In some of these tasks, deep learning produces results comparable to, and in some cases superior to, those of human experts. It uses a cascade of multiple layers of nonlinear processing units for feature extraction and transformation, in which each successive layer takes the output of the previous layer as its input.
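The layer-by-layer flow described above can be sketched in a few lines of plain Python. This is only an illustration: the names `dense` and `forward`, the tanh nonlinearity and the hand-picked weights are assumptions made for the example, not something prescribed by a particular library.

```python
import math

def dense(inputs, weights, biases):
    """One layer: a weighted sum per unit followed by a tanh nonlinearity."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Feed the output of each layer into the next one."""
    activation = inputs
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation

# Hypothetical parameters: 2 inputs -> 3 hidden units -> 1 output unit.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = forward([1.0, 2.0], layers)
print(output)
```

In a real model the weights would be learned from data rather than written by hand.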

The layers of a deep learning model form a hierarchy of concepts, with multiple levels of representation that correspond to different levels of abstraction. Training typically uses some form of gradient descent with backpropagation. The layers used in deep learning include the hidden layers of an artificial neural network and sets of propositional formulas.
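As a rough sketch of gradient descent with backpropagation, consider the smallest possible "deep" model: one tanh hidden unit followed by one linear output unit, trained on a single example. The model shape, the squared-error loss, the starting weights and the learning rate are all assumptions chosen to keep the example short.

```python
import math

def forward(w1, w2, x):
    h = math.tanh(w1 * x)   # hidden activation
    y = w2 * h              # linear output
    return h, y

def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def gradients(w1, w2, x, target):
    """Backpropagation: apply the chain rule from the loss back to each weight."""
    h, y = forward(w1, w2, x)
    d_y = y - target                 # dL/dy
    d_w2 = d_y * h                   # dL/dw2
    d_h = d_y * w2                   # dL/dh
    d_w1 = d_h * (1.0 - h * h) * x   # dL/dw1, since tanh'(z) = 1 - tanh(z)^2
    return d_w1, d_w2

w1, w2 = 0.5, -0.3          # hypothetical starting weights
x, target = 1.0, 0.8        # a single hypothetical training example
learning_rate = 0.1
initial_loss = loss(w1, w2, x, target)
for _ in range(200):
    g1, g2 = gradients(w1, w2, x, target)
    w1 -= learning_rate * g1   # gradient descent step
    w2 -= learning_rate * g2
final_loss = loss(w1, w2, x, target)
print(initial_loss, final_loss)
```

The same pattern, a forward pass, a backward pass and a small step against the gradient, carries over to networks with many layers and many weights.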

Deep learning can be supervised or unsupervised. In supervised learning, the model infers a function from labeled data; in unsupervised learning, it infers structure from unlabeled data. Classification is a typical supervised task, and pattern analysis is a typical unsupervised one.
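To make the difference concrete, here is a small sketch on one-dimensional toy data. It does not use deep learning at all: a nearest-centroid classifier stands in for the supervised case and a two-cluster k-means for the unsupervised case. The data, the labels and the function names are made up for illustration.

```python
def fit_centroids(points, labels):
    """Supervised: the labels say which points belong together."""
    groups = {}
    for p, label in zip(points, labels):
        groups.setdefault(label, []).append(p)
    return {label: sum(ps) / len(ps) for label, ps in groups.items()}

def predict(centroids, p):
    """Assign a new point to the label with the nearest centroid."""
    return min(centroids, key=lambda label: abs(centroids[label] - p))

def two_means(points, iterations=10):
    """Unsupervised: find two group centers without labels (1-D k-means, k=2).

    Assumes the points contain at least two distinct values.
    """
    lo, hi = min(points), max(points)
    for _ in range(iterations):
        near_lo = [p for p in points if abs(p - lo) <= abs(p - hi)]
        near_hi = [p for p in points if abs(p - lo) > abs(p - hi)]
        lo = sum(near_lo) / len(near_lo)
        hi = sum(near_hi) / len(near_hi)
    return lo, hi

points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
labels = ["small", "small", "small", "large", "large", "large"]

centroids = fit_centroids(points, labels)   # uses the labels
print(predict(centroids, 4.0))
print(two_means(points))                    # never sees the labels
```

Both approaches end up with similar group centers here, but only the supervised one can attach a name to each group.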

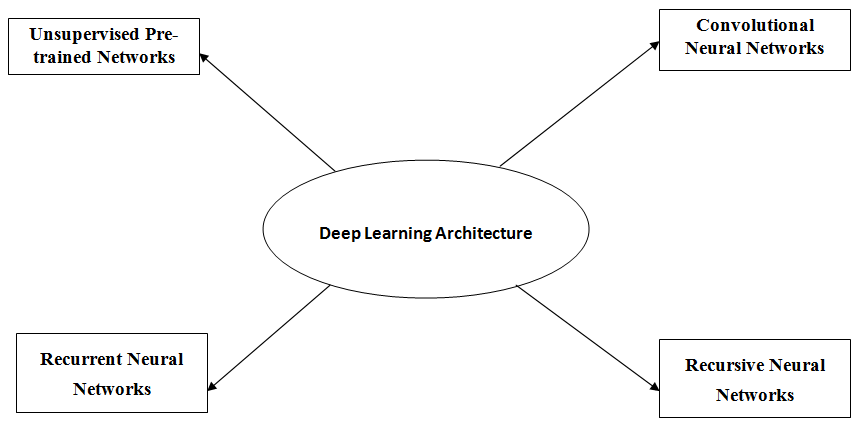

Major Architectures of Deep Learning

Let’s review four major deep learning architectures.

- Unsupervised Pre-trained Networks (UPNs)

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks

- Recursive Neural Networks

However, the two most important architectures are Convolutional Neural Networks (CNNs) for image modeling and Long Short-Term Memory (LSTM) networks, a type of recurrent network, for sequence modeling.

Unsupervised Pre-trained Networks (UPNs)

In this group, there are three specific architectures:

- Autoencoders

- Deep Belief Networks (DBNs)

- Generative Adversarial Networks (GANs)

Autoencoders

An autoencoder is trained to reconstruct its input. Its output layer must therefore be able to reproduce the input, which means the output activation function has to be chosen carefully. The input values must also be normalized to a range the output layer can produce, and the output must have the same shape as the input.
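The point about activation functions and input ranges can be illustrated without training anything. The sketch below assumes a sigmoid output layer, whose values always lie between 0 and 1, and hypothetical 8-bit pixel intensities; the helper names are invented for the example.

```python
def min_max_scale(values):
    """Rescale values linearly to [0, 1]; assumes they are not all equal."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def best_sigmoid_reconstruction(targets):
    """Closest values a sigmoid output layer could approach: targets clipped to [0, 1]."""
    return [min(1.0, max(0.0, t)) for t in targets]

def squared_error(xs, ys):
    return sum((x - y) ** 2 for x, y in zip(xs, ys))

raw_pixels = [0.0, 64.0, 128.0, 255.0]      # hypothetical 8-bit intensities
scaled_pixels = min_max_scale(raw_pixels)   # now within [0, 1]

# Even an ideal sigmoid output cannot get close to the raw values...
raw_error = squared_error(raw_pixels, best_sigmoid_reconstruction(raw_pixels))
# ...but the scaled values are within its reach.
scaled_error = squared_error(scaled_pixels, best_sigmoid_reconstruction(scaled_pixels))
print(raw_error, scaled_error)
```

With a linear output layer the constraint would be different, which is why the activation function and the normalization have to be chosen together.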

Deep Belief Networks (DBNs)

A Deep Belief Network (DBN) is a class of deep neural network composed of multiple layers of hidden units, with connections between layers but not between units within the same layer.

A deep belief network can be seen as a composition of simple unsupervised networks such as restricted Boltzmann machines (RBMs), where the hidden layer of each sub-network serves as the visible layer of the next. A restricted Boltzmann machine is a generative, energy-based model with a visible input layer and a hidden layer, with connections between the two layers but none within a layer.
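The stacking idea can be sketched as follows. The example assumes binary RBMs, for which the probability that hidden unit j is on given the visible units is the sigmoid of its bias plus the weighted sum of the visible values. The weights are made up and untrained, and passing the hidden probabilities (rather than sampled binary states) to the next RBM is a simplification.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_probabilities(visible, weights, hidden_bias):
    """P(h_j = 1 | v) for a binary RBM; weights[i][j] links visible i to hidden j."""
    return [
        sigmoid(hidden_bias[j] + sum(v * weights[i][j] for i, v in enumerate(visible)))
        for j in range(len(hidden_bias))
    ]

# Hypothetical parameters: first RBM has 3 visible and 2 hidden units,
# second RBM has 2 visible and 1 hidden unit.
rbm1_weights = [[0.5, -0.3], [0.2, 0.8], [-0.6, 0.1]]
rbm1_hidden_bias = [0.0, -0.1]
rbm2_weights = [[1.2], [-0.7]]
rbm2_hidden_bias = [0.05]

visible = [1.0, 0.0, 1.0]
h1 = hidden_probabilities(visible, rbm1_weights, rbm1_hidden_bias)
# The hidden layer of the first RBM acts as the visible layer of the second.
h2 = hidden_probabilities(h1, rbm2_weights, rbm2_hidden_bias)
print(h1, h2)
```

Note that no term connects two units of the same layer, which is the "restricted" part of the name.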

Deep belief networks have been used in real-life applications such as drug discovery and electroencephalography.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a class of artificial intelligence algorithms used in unsupervised machine learning. A GAN is implemented as a system of two neural networks competing against each other in a zero-sum game framework.

A zero-sum game is a mathematical model of a situation in which each participant's gain or loss of utility is exactly balanced by the losses or gains of the other participants. When the total gains are added and the total losses are subtracted, the result is zero.
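For GANs, the zero-sum property can be shown with the standard minimax value function: the discriminator tries to maximize a value V and the generator receives exactly -V. The sketch below takes the discriminator's output probabilities as plain lists; the function names and the sample numbers are assumptions for illustration.

```python
import math

def discriminator_payoff(d_real, d_fake):
    """Value V: high when real samples score near 1 and generated ones near 0.

    d_real and d_fake hold the discriminator's probabilities, strictly
    between 0 and 1, that each sample is real.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_payoff(d_real, d_fake):
    """In the zero-sum (minimax) formulation the generator receives -V."""
    return -discriminator_payoff(d_real, d_fake)

d_real = [0.9, 0.8, 0.95]   # hypothetical scores on real samples
d_fake = [0.2, 0.1, 0.3]    # hypothetical scores on generated samples

v = discriminator_payoff(d_real, d_fake)
g = generator_payoff(d_real, d_fake)
print(v, g, v + g)          # the two payoffs cancel out
```

When the discriminator cannot tell real from generated samples and outputs 0.5 everywhere, V equals -log 4. In practice, many implementations train the generator with a modified, non-saturating loss, so the strict zero-sum form is best seen as the idealized version of the game.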

Convolutional Neural Networks (CNNs)

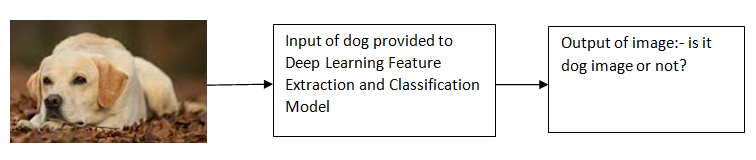

A convolutional neural network is a class of deep, feed-forward artificial neural network that is most commonly applied to analyzing visual imagery.

Convolutional neural networks use a variation of the multilayer perceptron designed to require minimal preprocessing. They are also known as space invariant artificial neural networks. The connectivity pattern between neurons resembles the organization of the animal visual cortex.

The aim of a convolutional neural network is to learn higher-order features in the data by means of convolutions. CNNs are well suited to object recognition and are used to identify faces, street signs and other aspects of visual data. They are also used to analyze words as discrete textual units, overlapping with text analysis through optical character recognition. The success of CNNs in image recognition is a major reason why the world has taken notice of the power of deep learning.

Convolutional neural networks are used in many applications, such as image and video recognition, natural language processing and recommender systems.
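The convolution operation at the heart of a CNN can be sketched in plain Python. The function below is a minimal version with no padding and a stride of 1; like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation. The image and the edge-detecting kernel are hand-made examples, whereas a real CNN learns its kernels during training.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A tiny image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-made kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only where the kernel sits on the edge. Because the same kernel is reused at every position, the response does not depend on where in the image the edge appears.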

Recurrent Neural Networks

A recurrent neural network (RNN) is a network of connected neurons with feedback: connections between units form a directed cycle. In principle, an RNN can perform both parallel and sequential computation and can compute anything a traditional computer can. Like the human brain, it is a large feedback network of connected neurons that can translate an input stream into a sequence of motor outputs. An RNN uses its internal memory to process sequences of inputs. It models each vector in the sequence of input vectors one at a time, which allows the network to retain its state while modeling each input vector across the window of input vectors.

A recurrent neural network can be viewed as a network of neuron-like nodes, each with a directed connection to other nodes. Each node has a real-valued activation that varies with time, and each connection has a real-valued weight that can be modified. Nodes are either input nodes, which receive data from outside the network; output nodes, which yield results; or hidden nodes, which modify the data en route from input to output.
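How the internal state carries information from one input to the next can be sketched with a simple recurrent step. This assumes the common "vanilla" RNN update, in which the new hidden state is the tanh of a weighted sum of the current input and the previous hidden state; the weights and the input sequence are hypothetical.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, bias):
    """New hidden state from the current input and the previous hidden state."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_xh[k], x))
            + sum(w * hi for w, hi in zip(w_hh[k], h_prev))
            + bias[k]
        )
        for k in range(len(bias))
    ]

def run_rnn(sequence, h0, w_xh, w_hh, bias):
    """Process the input vectors one at a time, carrying the state along."""
    h = h0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, bias)
        states.append(h)
    return states

# Hypothetical parameters: 1 input feature, 2 hidden units.
w_xh = [[0.5], [-0.4]]              # input-to-hidden weights
w_hh = [[0.1, 0.2], [0.3, -0.1]]    # hidden-to-hidden (feedback) weights
bias = [0.0, 0.0]

# The same input twice: the second state differs because of the feedback.
states = run_rnn([[1.0], [1.0]], [0.0, 0.0], w_xh, w_hh, bias)
print(states)
```

If the hidden-to-hidden weights were all zero, both states would be identical, since the network would have no memory of earlier inputs.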

Applications of Recurrent Neural Networks include:

- Robot control

- Time series predictions

- Rhythm learning

- Music composition

- Grammar learning

- Handwriting recognition

- Human action recognition

Recursive Neural Networks

A recursive neural network is a kind of deep neural network. It consists of a shared weight matrix and a binary tree structure that allow the network to learn varying sequences of words or parts of an image. It is created by applying the same set of weights recursively over a structure, producing a structured prediction over variable-size input structures, or a scalar prediction on it, by traversing the structure in topological order. A recursive neural network can be used as a scene parser. Like recurrent neural networks, recursive neural networks can handle variable-length input.

Recursive neural networks are trained with backpropagation through structure (BPTS), a variation of backpropagation. They can operate on any hierarchical structure, combining child representations into parent representations. They are used for learning sequences and tree structures in natural language processing, mainly for phrase and sentence representations based on word embeddings.
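The way child representations are combined into parent representations can be sketched as a recursive function over a binary tree. The example assumes that a parent vector is the tanh of one shared weight matrix applied to the two concatenated child vectors. The tiny word embeddings, the weights and the parse tree are invented for illustration, and only the forward pass is shown, not training with BPTS.

```python
import math

def compose(left, right, weights, bias):
    """Parent vector from two child vectors, using the shared weight matrix."""
    children = left + right  # concatenate the two child vectors
    return [
        math.tanh(sum(w * c for w, c in zip(row, children)) + b)
        for row, b in zip(weights, bias)
    ]

def encode(tree, embeddings, weights, bias):
    """Encode a binary tree bottom-up; leaves are words, internal nodes are pairs."""
    if isinstance(tree, str):
        return embeddings[tree]
    left, right = tree
    return compose(
        encode(left, embeddings, weights, bias),
        encode(right, embeddings, weights, bias),
        weights,
        bias,
    )

# Hypothetical 2-dimensional word embeddings and a 2x4 shared weight matrix.
embeddings = {"the": [0.1, 0.3], "cat": [0.7, -0.2], "sleeps": [-0.4, 0.5]}
weights = [[0.5, -0.1, 0.3, 0.2], [-0.2, 0.4, 0.1, 0.6]]
bias = [0.0, 0.1]

sentence = (("the", "cat"), "sleeps")   # a hypothetical parse tree
phrase_vector = encode(("the", "cat"), embeddings, weights, bias)
sentence_vector = encode(sentence, embeddings, weights, bias)
print(phrase_vector, sentence_vector)
```

Because the same weights are applied at every node, phrases and sentences of any length end up as vectors of the same size as the word embeddings.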

Applications of Deep Learning

- Automatic speech recognition

- Image recognition

- Visual art processing

- Natural language processing

- Drug discovery and toxicology

- Customer relationship management

- Recommendation systems

- Bioinformatics

- Mobile advertising

Comment below if you have any questions about this introduction to deep learning.

The post Introduction to Deep Learning appeared first on The Crazy Programmer.

from The Crazy Programmer https://www.thecrazyprogrammer.com/2017/12/introduction-to-deep-learning.html
